S2.1 Introduction

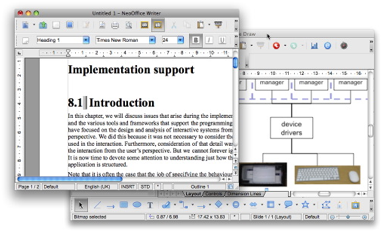

In this chapter, we will discuss issues that arise during the implementation of an interactive system and the various tools and frameworks that support the programming of such systems. So far we have focused on the design and analysis of interactive systems from a relatively abstract perspective. We did this because it was not necessary to consider the specific details of the devices used in the interaction. Furthermore, consideration of that detail was an obstacle to understanding the interaction from the user's perspective. But we cannot forever ignore the specifics of the device. It is now time to devote some attention to understanding just how the task of coding the interactive application is structured.

Note that it is often the case that the job of specifying the behaviour of the interactive system falls to a different person than the role of actually coding it. However, even if you never expect to programme yourself, it is worth understanding some of the issues that occur when that specification is turned into running code.

The detailed specification gives the programmer instructions as to what the interactive application must do and the programmer must translate that into machine executable instructions to say how that will be achieved on the available hardware devices. The objective of the programmer then is to translate down to the level of the software that runs the hardware devices. At its crudest level, this software provides the ability to do things like read events from various input devices and write primitive graphics commands to a display. Whereas it is possible in that crude language to produce highly interactive systems, the job is very tedious and highly error prone; the user-interface developer does not normally want to think about the details of the electronics of the trackpad on a laptop computer or the optical sensors on a mouse. That is there is a need for levels of abstraction that lift the programming from the specific details of hardware to interaction techniques.

The programming support tools that we describe in this chapter aim to move this level of abstraction up from the raw sensors and low-level hardware devices to a higher level in which the programmer can code more directly in terms of the interaction objects of the application. The emphasis here is on allowing the programmer to build the system in terms of its desired interaction techniques, a term we use to indicate the intimate relationship between input and output. Though there is a fundamental separation between input and output devices in the hardware devices and at the lowest software level, the distinction can be removed at the programming level with the right abstractions and hiding of detail.

In the remainder of this chapter, we will address the various layers that constitute the move from the low-level hardware up to the more abstract programming concepts for interaction. We begin in Section 8.2 with the elements of a windowing system, which provide for device independence and resource sharing at the programming level. Programming in a window system frees the programmer from some of the worry about the input and output primitives of the machines the application will run on, and allows her to program the application under the assumption that it will receive a stream of event requests from the window manager. In Section 8.3 we describe the basic management of output and input. We consider the different ways in which the application interacts with the display mediated by the window manager and also at the two fundamental ways the stream of input events can be processed to link the interface with the application functionality. In Section 8.4, we describe the use of toolkits as mechanisms to link input and output at the programming level, allowing coding at the level of interaction objects rather than raw events and screen drawing. In Section 8.5, we discuss the architecture styles, frameworks and development tools that can help structure and guide the construction of a user interface. Section 8.6 looks at the issues that arise when developing for widely different platforms and devices, and techniques that help create code that can adapt to the different capabilities of different devices.

Isn't this just Software Engineering?

If you are a programmer, this might sound as if it is all just standard software engineering, simply applied to the user interface. Obviously HCI affects the design and therefore what is produced; however, the nature of user interfaces can make certain aspects of programming an interactive application more difficult then or at least different from other kinds of coding. That is, HCI also impacts how the design is put together.

One example is the separation between applications in a typical desktop environment. From a software engineering point of view it is important to keep them separate so that if the word processor crashes it does not bring down the web browser and vice versa. However, when the user looks at the screen it is all apparently available – just as in real life you may use your mug to hold open the page of a book, you don't think "mugs are functionally different from pages" you just do it. However, on the desktop even cut-and-paste or drag-and-drop between applications is effectively breaking the functional separation. Within a single application the same issue arises and users expect to be able to freely move between aspects of the user interface even if they belong to different modules of the underlying application.

S2.2 Windowing Systems

In earlier chapters, we have discussed the elements of the WIMP interface but only with respect to how they enhance the interaction with the end-user. Here we will describe more details of windowing systems used to build the WIMP interface. in particular we will describe the ways in which the window system provides:

device independence – hiding some of the differences between different IO devices

resource sharing – allowing multiple applications to use the same keybaord, screen, etc.

application management – swopping control between applications and various cross-application functions such as cut and paste

In order to achieve these things window managers have adapted several different kinds of internal architecture.

S2.2.1 Device independence

A computer may use many different kinds of pointing device (see also chapter 2): mouse, trackpad, joystick; it may even have a touchscreen such as an iPhone or iPad. Similarly there are various different kinds of keyboards from traditional QUERTY keyboards, to multi-tap phone keypads, and software-keyboards on touchscreens. Even 'standard' keyboards come in slightly different layouts in different countries and have more or less special keys such as cursor arrows, or function keys. Furthermore screens come in different resolutions from a few hundred pixels across on a phone to many thousands in a desktop 'cinema' display (Figure 8.1).

Figure 8.1. Devices differ: different keyboards, pointers and screens

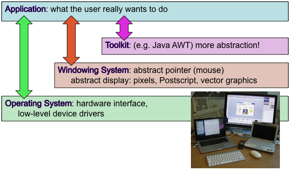

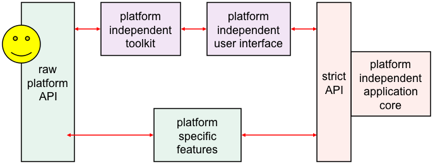

As an application developer you often want to ignore these details as much as is sensible for the nature of the interaction. This abstraction is provided by a number of layers of software (Figure 8.2). These differ slightly in different platforms, but the typical layers are:

operating system – This provides the basic hardware interface, and low-level device drivers.

windowing system – This has a number of functions, but one is to provide abstractions such as an abstract idea of a pointer/mouse and an abstract screen/display model. The display model is often based on pixels, but there are alternatives such as the use of Postscript or vector graphics (see section 8.3.1).

toolkit (e.g. Java AWT/Swing) – These provide higher level abstractions such as components for menus, tabbed displays. Sometimes toolkits themselves come in several levels each adding more abstraction over the layer below.

The application will deal most with the highest level of toolkit (discussed in section 8.4), but typically can access the raw window manager or operating system when the toolkit does not provide everything that is needed. For example, the cut-and-paste support in Java AWT is limited, so for specialised applications you need to create small modules of native code to access the underlying window system clipboard. However, the more applications access underlying windowing systems or operating systems, the more code needs to be re-written when porting between platforms.

Figure 8.2. Layers between application and raw devices

S2.2.2 Resource sharing

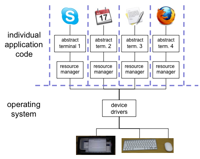

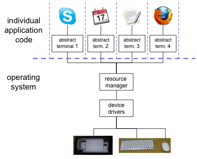

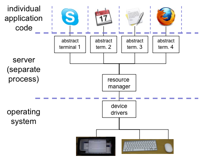

Often you have many applications on a computer, but typically one screen, one keyboard and one mouse. Furthermore, the user has a single pair of eyes and fingers, so that even if you had a screen huge enough to show every possible application the user would not be able to look at them all at once! One job of the windowing system is to share these fixed interaction resources between applications. The windowing system manages this by largely separating out each application, so that for many purposes, each appears to have the computer with all its resources to itself. This is sometimes described as a virtual or abstract terminal (Figure 8.3). The window manager decides which keyboard and mouse events to pass on to each application (sometimes translating coordinates) and deals with overlapping or otherwise interfering windows, and each application reacts to mouse and keyboard events, and displays material on its windows, just as though there were no other applications.

Figure 8.3. Giving each application an abstract terminal

The input devices are usually shared using the idea of input focus. By clicking on a window, or sometimes tabbing between them, the user can choose to use the keyboard to type into different applications, or the mouse to select or point in different windows. Note this is effectively time-based sharing as at any point in tome a single application 'owns' the keyboard and mouse.

For the screen there are several possibilities for window layout:

tiled (space shared) – Here each window/application is given a dedicated portion of the screen and can do what it likes there. This is often found in web sidebars, where widgets are stacked one above another. Note that this is a form of space-based sharing as each application has a part of the screen space. Of course, this has a natural limit when the screen is full. For web sidebars this is partly managed by the fact that the screen can scroll, however also each widget may be able to be hidden or expanded by clicking its title bar, thus giving the user more control over what is seen at any single moment.

Figure 8.4. Tiled

sidebar with expandable widgets

in Wordpress admin screen

single app (time shared) – Some systems do the opposite and dedicate the whole screen to a single application or window, swopping which application gets the screen at any point in time. This was found in early versions of Windows, but is now more common in mobile devices such as phones as the screen is so small anyway that splitting it further would be silly. Note that this is a form of time-based sharing of the resource as at any moment precisely one application 'owns' the screen. The window system needs only have some means to swop between applications. For example, on the iPhone this is achieved by clicking the big button at the bottom and selecting an icon representing the intended application.

overlapping (time and space) – For desktop and laptop PCs, the most common layout is nether of the above, but instead the use of overlapping windows. In this case we have something that has elements of both time and space based sharing as some part of the screen have overlaps and are therefore time-shared (depending on which is on top) and other parts, where smaller windows sit side-by-side, are space shared.

|  |  | ||

| (i) | (ii) | (iii) |

Figure 8.5. Window layout (i) tiled (ii) single app and (iii) overlapping

Of

course it is not just the screen and keyboard that users care about; other aspects

of the device are also shared, especially when thinking about a mobile

device. There is one battery, so

that power management is crucial. Some phone-based operating systems work very like desktop-based ones

with applications running all the time and consequentially running down the

battery! The iPhone is often

criticised for being single-threaded,

but this is almost certainly one of the reasons for relatively long battery

life. The network is also a shared

resource and if one application hogs it may slow down others ... furthermore it

may cost the user in data charges!

Of

course it is not just the screen and keyboard that users care about; other aspects

of the device are also shared, especially when thinking about a mobile

device. There is one battery, so

that power management is crucial. Some phone-based operating systems work very like desktop-based ones

with applications running all the time and consequentially running down the

battery! The iPhone is often

criticised for being single-threaded,

but this is almost certainly one of the reasons for relatively long battery

life. The network is also a shared

resource and if one application hogs it may slow down others ... furthermore it

may cost the user in data charges!

S2.2.3 Application management

There are many applications! While they typically have control of what happens inside their windows, the window manager provides a consistent look-and-feel to the window 'decoration', the borders, resizing controls, title bar. As an application programmer you simply create a window and occasionally get events such as 'resized' or 'close', but otherwise the windowing system worries about what they look like and how they behave.

The window system also takes responsibility for many aspects of inter-application sharing such as cut & paste and drag & drop. As an application developer there are typically calls to the windowing systems to say "here is some data of this type for the clipboard", and the windowing system will provide events such as "data of this type has been dropped here". The window system also manages a degree of negotiation between the application providing data (where it was cut/copied or dragged from) and the application using it (paste or drop location). Some windowing systems also provide a level of scripting or automation between applications, for example the Mac Automator.

Finally, the windowing system has to provide some form of user interface to mange things like swopping between applications, closing windows, selecting the keyboard focus, or arranging overlapping windows and setting various global user preferences (e.g. Mac Finder, Windows Explorer). As well as 'big applications', even desktop interfaces now often have additional micro-applications such as the Mac Dashboard, which also need means for activating, etc.

S2.2.4 Architecture of window systems

Bass and Coutaz [29] identify three possible architectures for the software to implement the roles of a windowing system. All of them assume that device drivers are separate from the application programs. They differ as to where they place the main window management role.

replicate in applications – The first option is to replicate the code for managing multiple processes within each of the separate applications (Figure 8.6.a). This is not a very satisfactory architecture because it forces each application to consider the difficult problems of resolving synchronization conflicts with the shared hardware devices. It also reduces the portability of the separate applications.

centralise in the OS kernel – The second option is to implement the management role within the kernel of the operating system, centralizing the management task by freeing it from the individual applications (Figure 8.6.b). Applications must still be developed with the specifics of the particular operating system in mind.

distribute in separate process (client–server) – The third option provides the most portability, as the management function is written as a separate application in its own right and so can provide an interface to other application programs that is generic across all operating systems (Figure 8.6.c). This final option is referred to as the client–server architecture, and has the added advantage that it can operate easily over networks.

(a) Replicated

(b) Centralised

(c) Client–server

Figure 8.6. Different window manager architectures

In practice, the divide among these proposed architectures is not so clear and any actual interactive application or set of applications operating within a window system may share features with any one of these three conceptual architectures.

The early versions of Mac OS and Windows assumed 'cooperative' applications', which, whilst not entirely replicated, did mean that each application was responsible for some window management functions. In the case of Mac OS X this allowed very efficient screen rendering, important for early graphics applications, albeit at the risk of freezing if an application failed.

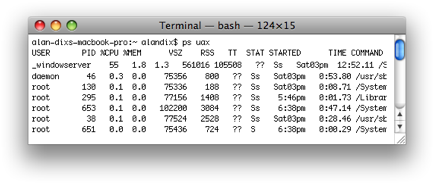

Later versions of both Mac OS X and Windows follow more closely the centralised architecture, giving them far greater control over applications. However, whilst the programmer on both platforms sees the window manager and kernel as if they were one, in fact digging deeper the window manager is often separated out. Figure 8.7 shows a listing of the processes on a Mac OS X computer. The window manager (_windowserver) can clearly be seen as a separate process. That is there are some elements of client–server operation internally, even if not obvious to the user or programmer.

Figure 8.7. Mac OS X – window manager running as separate process

Even in a pure client–server window manager, there may be one component that is a separate application or process together with some built-in operating system support and hand-tuned application support to manage the shared resources. So applications built for a window system which is notionally based on the client–server model may not be as portable as one would think.

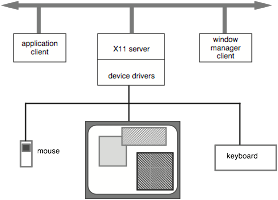

The classic example of a window system based on the client–server architecture is the industry-standard X Window System (Release 11), developed at the Massachusetts Institute of Technology (MIT) in the mid-1980s, and most familiar now on LINUX-based systems. Figure 8.8 shows the software architecture of X. X (or X11) is based on a pixel-based imaging model and assumes that there is some pointing mechanism available. What distinguishes X from other window systems, and the reason it has been adopted as a standard, is that X is based on a network protocol that clearly defines the server–client communication. The X Protocol can be implemented on different computers and operating systems, making X more device independent. It also means that client and server need not even be on the same system in order to communicate to the server. Each client of the X11 server is associated to an abstract terminal or main window. The X server performs the following tasks:

- allows (or denies) access to the display from multiple client applications;

- interprets requests from clients to perform screen operations or provide other information;

- demultiplexes the stream of physical input events from the user and passes them to the appropriate client;

- minimizes the traffic along the network by relieving the clients from having to keep track of certain display information, like fonts, in complex data structures that the clients can access by ID numbers.

Figure 8.8 The X Window System (Release 11) architecture

A separate client – the window manager – enforces policies to resolve conflicting input and output requests to and from the other clients. There are several different window managers that can be used in X, and they adopt different policies. For example, the window manager would decide how the user can change the focus of his input from one application to another. One option is for the user to nominate one window as the active one to which all subsequent input is directed. The other option is for the active window to be implicitly nominated by the position of the pointing device. Whenever the pointer is in the display space of a window, all input is directed to it. Once the pointer is moved to a position inside another window, that window becomes active and receives subsequent input. Another example of window manager policy is whether visible screen images of the client windows can overlap or must be non-overlapping (called tiling). As with many other windowing systems, the client applications can define their own hierarchy of sub-windows, each of which is constrained to the coordinate space of the parent window. This subdivision of the main client window allows the programmer to manage the input and output for a single application similar to the window manager.

To aid in the design of specific window managers, the X Consortium has produced the Inter-Client Communication Conventions Manual (ICCCM), which provides conventions for various policy issues that are not included in the X definition. These policies include:

- rules for transferring data between clients;

- methods for selecting the active client for input focus;

- layout schemes for overlapping/tiled windows as screen regions.

S2.2.5 Not just the PC

Issues of application management and architecture are not just issues for PCs, but any platform where there are multiple applications:

phone – Faces similar issues to the PC sharing screen, keyboard, etc.. As noted the choice is usually to go for 'full screen' apps, not overlapping windows, however Vodafone 360 has semi-open apps, which take up several 'tile' locations in the screens showing application icons.

web – In web applications the browser in many ways takes to role of window manager. The browser may make use of he window system's ability to have several browser windows open, but within a window space is usually managed using tabs, which are effectively a form of space-based sharing.

web micro-apps – Various web platforms allow the user to add micro-applications such as Facebook apps and Google widgets. The platform may offer these access to shared data (e.g. Facebook friend's birthdays) and have to manage screen space, often using a combination of space-shared columns and time-shared tabs.

dedicated devices (e.g. microwave control panel) – These are mostly coded direct to hardware as they have very bespoke input and output. However, there are appliance-oriented variants of Java and Windows providing some higher-level abstractions.

S2.3 Programming the Application

S2.3.1 Paint models

When you want to put something on the screen it goes through all the layers referred to earlier. Your code interacts with a toolkit (say Jave AWT/Swing), which then passes this on to the window manager, which then manages things like overlaps with other application windows before interacting through the operating system and device drivers to actually paint pixels on the screen (see Figure 8.9).

Figure 8.9. Screen painting layers

Systems and toolkits differ in what you actually draw to:

direct to the screen – The simplest is when the application are given direct access to screen pixels. This is clearly most efficient for high-throughput graphics, such as vide replay, but has problems if the application misbehaves and starts to draw to areas of the screen that it shouldn't such as other applications' space or the windowing system's own UI elements.

direct to screen via viewport – The windowing system may exert a little more control by only allowing access through a 'viewport'. This means that when the application asks to draw pixels the output may be clipped if it is outside the allowed region, or if part of the window is currently covered by another window. This might also include coordinate transformations o that the application can effectively draw in a window with x and y coordinates 0-199, but have the window really positioned in the middle of the actual screen. For example, Java AWT works in this manner.

separate buffer – Sometimes instead of writing instantly to the screen the applications drawing operations are applied to a buffer in memory and only when this is finished is the whole buffer written to the screen. This may happen at the level of toolkit or and/or underlying window system and is normally called double buffering in a toolkit or retained bitmap in the windowing system. The latter we deal with later. The reason for double buffering at the toolkit level (whether or not the windowing system has a buffer), is to reduce flicker. Without double buffering it may be that the user sees a screen half-drawn, whereas double buffering means that the entire window instantly swops from the old to the new buffer. This is an option in Java Swing.

display list – Instead of working with the screen as an array of pixels, some systems store a list of operations recording, say, that there should be a line, image or text at a particular location on the screen. The toolkit then worries about showing these on the screen. This means that the application can simply change the display list when screen elements need to be updated, rather than redraw the whole screen. Also it allows the toolkit or window system to perform optimisations and hence is used in some high-performance graphical toolkits including OpenGL as well as older standards such as GKS (Graphics Kernel System) and PHIGS (Programmers Hierarchical Interface to Grahics).

page-description language – These are dedicated languages/notations used to describe the appearance of a page of graphics and text. The most commonly known are PDF and PostScript (both developed by Adobe Corporation). Both were originally developed for static printed pages, but have later been adapted for interactive displays (in NeXT and Mac OS X respectively). They model the screen as a collection of paths which serve as infinitely thin boundaries or stencils which can be filled in with various colors or textured patterns and images.

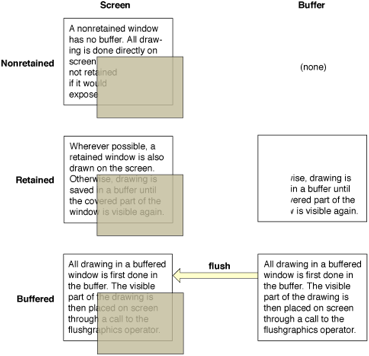

Buffering may also be used by the window system to store parts of the application window in order to more quickly update the screen when the user is swopping between overlapping windows. For example Mac OS X offers applications the choice of three levels of buffering which differ largely in how they cope with overlapping or translucent windows:

nonretained – This is the simplest options, the window manager remembers nothing and whenever a part of the application window that was hidden is later exposed the application is asked to redraw the previously hidden portion. This works best if the contents of the application window are changing very rapidly as any hidden parts will need to have fresh contents anyway.

retained – Where the window manager buffers just the hidden parts of overlapping windows. This means that when the window is later exposed the hidden part can be instantly drawn. Note that 'hidden' here includes being covered by a translucent overlay which may later move. (Note, this option was withdrawn in Mac OS 10.5, in favour of buffered below).

buffered – Here the window manager keeps a copy of the entire window, both hidden parts and non-hidden parts. This takes most memory, but gives the maximum responsiveness if, for example, the window is itself translucent and is dragged over other windows.

Fig 8.10. Buffering options in Mac OS X [Ap10]

There are different reasons why the screen needs to be redrawn:

internal events – Sometimes it is an event inside the application which leads to the needs to update the screen. For example, in a clock the digits need to change, or if downloading a large data file the progress indicator may need to update. In the case of internal events the application 'knows' that the screen has changed, but may need to tell the toolkit and ultimately the window manager.

external events – Alternatively the event may be due to something the user did to the application. For example the user might have clicked the 'bold' icon and the currently selected word needs to be emboldened. In this case it is the window manager that first 'knows' that the user ahs clicked the mouse, then passes this to the toolkit, which may sometimes respond directly (e.g. during navigation of a menu) or pass it on to the application.

However, just because the screen needs to be updated does not mean the update happens at that moment. For example, if there were many updates within a few tens of milliseconds, it would not be worth updating the screen several times as this would all be within a single display frame.

internal control – This is probably the easiest option to understand. When the application wants the screen changed it simply tells the toolkit/window manager that something needs to be updated. This has the problem noted above of potentially wasted updates, or taking time redrawing the screen when maybe user input is queued up needing to be processed. However this method works fine if there is some sort of intermediate representation such as the display list or a retained bitmap as then the actual display is only updated once per frame.

external control – In this case the toolkit / window manager decides when it wants a part of the screen to be updated (for example, when a hidden part is exposed) and asks the application to draw it. In Java this is what happens in a 'paint()' method. However, while this works easily for externally generated events when the window system 'knows' that a change is required, there is of course a problem for internally generated change. This is the purpose of the 'repaint()' method in Java; the application is saying to the toolkit "please repaint my window sometime when you are ready". At some later point, often when the input event queue is empty, the toolkit calls the applications 'paint()' method and screen is updated.

draw once per frame – This is a variant of external control used principally in video-game engines where much of the screen updates in each frame (e.g. first person shooters, or car racing). Once per frame the application is asked to redraw the screen. If event happen between these calls the application usually just updates some internal state but does not update the screen itself. Then when it is asked to redraw itself, the application takes the current state, maybe polls the state of joystick buttons, and generates the new screen.

S2.3.2 Event models

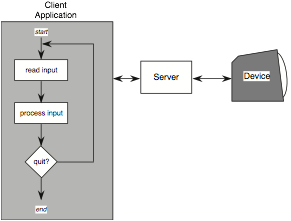

We now concentrate our attention on programming the actual interactive application, which would correspond to a client in the client–server architecture of Figure 8.6.c. Interactive applications are generally user driven in the sense that the action the application takes is determined by the input received from the user. We describe two programming paradigms that can be used to organize the flow of control within the application. The windowing system does not necessarily determine which of these two paradigms is to be followed.

The first programming paradigm is the read–evaluation loop, which is internal to the application program itself (see Figure 8.4). Programming on the Macintosh follows this paradigm. The server sends user inputs as structured events to the client application. As far as the server is concerned, the only importance of the event is the client to which it must be directed. The client application is programmed to read any event passed to it and determine all of the application-specific behavior that results as a response to it. The logical flow of the client application is indicated in the leftmost box of Figure 8.11. In pseudocode the read–evaluation loop would look like the following:

repeat read-event(myevent) case myevent.type type_1 : do type_1 processing type_2 : do type_2 processing . . . type_n : do type_n processing end case end repeat

Figure 8.11 The read–evaluate loop paradigm

The application has complete control over the processing of events that it receives. The downside is that the programmer must execute this control over every possible event that the client will receive, which could prove a very cumbersome task. However, where this is the chosen method, suitable tools can greatly ease the process. For example, early Macintosh user interfaces were constructed in this way, but the MacApp framework automated many of the more tedious aspects.

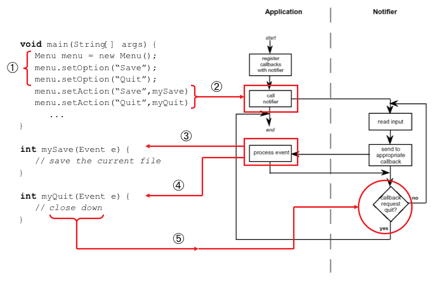

The other programming paradigm is notification based, in which the main control loop for the event processing does not reside within the application. Instead, a centralized notifier receives events from the window system and filters them to the application program in a way declared by the program (see Figure 8.12). The application program informs the notifier what events are of interest to it, and for each event declares one of its own procedures as a callback (also called listener) before turning control over to the notifier. When the notifier receives an event from the window system, it sees if that event was identified by the application program and, if so, passes the event and control over to the callback procedure that was registered for the event. After processing, the callback procedure returns control to the notifier, either telling it to continue receiving events or requesting termination.

Figure 8.12 The notification-based programming paradigm

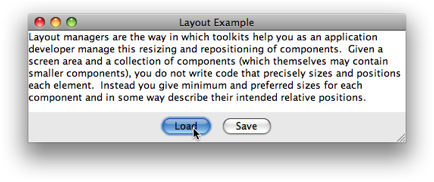

Figure 8.13 shows a fragment of pseudocode using the notification-based paradigm. In step (1) the application creates a menu and adds to options 'Save' and 'Quit'. Then at step (2) it tells the notifier to associate the callback function mySave with the 'Save' option and myQuit with the 'Quit' option. Later at (3) when the user selects the 'Save' option, the notifier calls the mySave method which would then do what is needed to save the current document, and when it returns the notifier goes on looking for more events to process. Later again (4) the user selects the 'Quit' menu option and the myQuit method is called. This would do any tidying up, perhaps close temporary files, but also (5) call some function on the notifier to tell it to stop processing. When it returns the notifier knows to stop and ends the program.

Figure 8.13 Fragment of pseudocode using notification paradigm

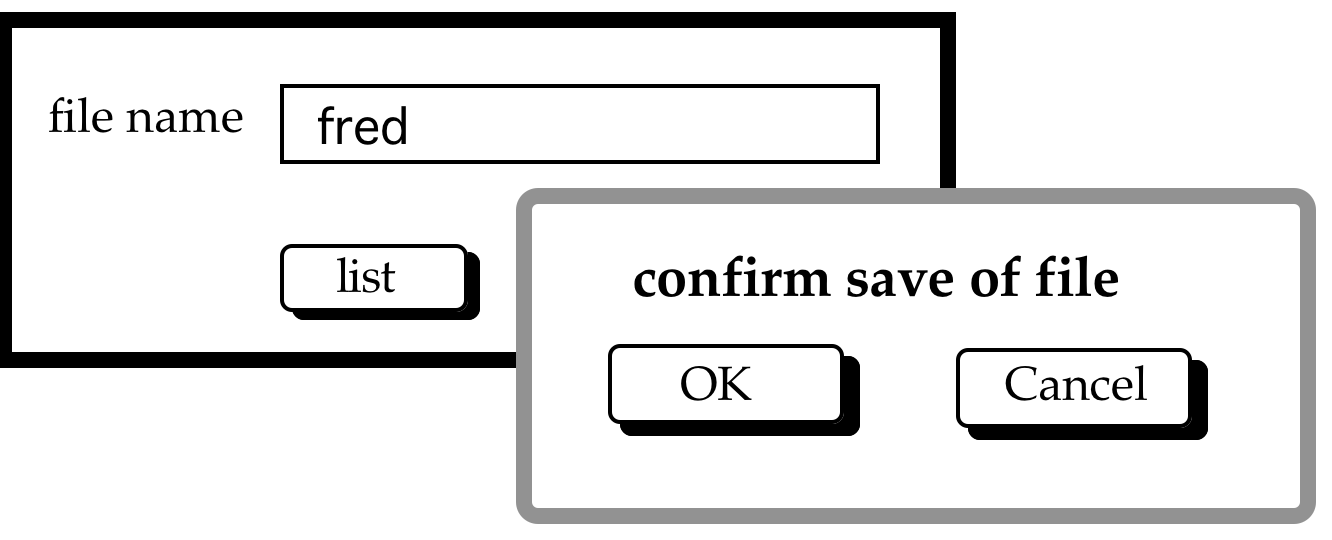

Control flow is centralized in the notifier, which relieves the application program of much of the tedium of processing every possible event passed to it by the window system. But this freedom from control does not come without a price. Suppose, for example, that the application program wanted to produce a pre-emptive dialog box, perhaps because it has detected an error and wants to obtain confirmation from the user before proceeding. The pre-emptive dialog effectively discards all subsequent user actions except for ones that it requires, say selection by the user inside a certain region of the screen. To do this in the read–evaluation paradigm is fairly straightforward. Suppose the error condition occurred during the processing of an event of type type_2. Once the error condition is recognized, the application then begins another read–evaluation loop contained within that branch of the case statement. Within that loop, all non-relevant events can be received and discarded. The pseudocode example given earlier would be modified in the following way:

repeat

read-event(myevent)

case myevent.type

type_1:

do type_1 processing

type_2:

. . .

if (error-condition) then

repeat

read-event(myevent2)

case myevent2.type

type_1 :

.

.

.

type_n :

end case

until (end-condition2)

end if

. . .

.

.

.

type_n:

do type_n processing

end case

until (end-condition)

In the notification-based paradigm, such a pre-emptive dialog would not be so simple, because the control flow is out of the hands of the application programmer. The callback procedures would all have to be modified to recognize the situations in which the pre-emptive dialog is needed and in those situations disregard all events which are passed to them by the notifier. Things would be improved, however, if the application programmer could in such situations access the notifier directly to request that previously acceptable events be ignored until further notice.

Design Focus

Going with the grain

It is possible to use notification-based code to produce a pre-emptive interface dialog such as a modal dialog box, but much more difficult than with an event-loop-based system. Similarly, it is possible to write event-loop-based code that is not pre-emptive, but again it is difficult to do so. If you are not careful, systems built using notification-based code will have lots of non-modal dialog boxes and vice versa. Each programming paradigm has a grain, a tendency to push you towards certain kinds of interface.

If you know that the interface you require fits more closely to one paradigm or another then it is worth selecting the programming paradigm to make your life easier! Often, however, you do not have a choice. In this case you have to be very careful to decide what kind of interface dialog you want before you (or someone else) start coding. Where the desired interface fits the grain of the paradigm you don't have to worry. Where the desired behavior runs against the grain you must be careful, both in coding and testing as these are the areas where things will go wrong.

Of course, if you don't explicitly decide what behavior you want or you specify it unclearly, then it is likely that the resulting system will simply run with the grain, whether or not that makes a good interface.

S2.3.3 Putting it together – events in Java: what happens when you click?

We have seen that there are several different options for screen painting and event processing. We now work through the detailed steps that happen when an event is processed in the Java AWT/Swing user interface toolkit.

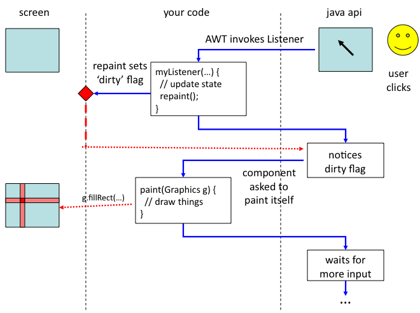

Underlying the way Java UIs work are two main kinds of activity, one responsible for processing events, such as mouse movement or keyboard input, and one for updating the screen, just as we have described in Section 8.3.1 and 8.3.2. In particular Java AWT/Swing adopts external control for screen painting and the notification-based paradigm for event management

We are going to pick up this story after listeners (callback) have been attached to events and go through the series of things that happen.

Most of your code to 'do things' will run as part of event processing, and if you use standard Swing components you may never directly write code that executes during screen painting … it is only when you need to produce a custom component and need to use direct graphics calls to draw lines, etc. that you may need to create a custom 'paint' method.

We'll go through the cycle of activities that typically occur when a user clicks the mouse. We will see the flow of between your own code and the parts of the Java API responsible for events and for screen painting. Figure 8.14 shows an overview of this process and we'll go through each step in detail.

Figure 8.14 The event-paint cycle

Stage 1 – the user clicks

When the user presses or releases a mouse button, moves the mouse or types on the keyboard an event is generated deep in the system. At the operating system level this is first channelled to the right application depending on what windows are visible, which application has control of the keyboard etc.

Assuming this is your java application, this eventually ends up in the Java runtime environment, which does a similar job deciding which component the event should be directed to. It needs to take into account that components may be placed on top of one another (e.g. when a combo-box menu hides part of the panel beneath) or not be active (e.g. in tabbed panels).

Stage 2 – a listener is called

Having found out which component is to receive the event, the Java runtime looks up the relevant registered Listener for the event. So, if you have added a MouseListener then this will be found if the event is a mouse press/release or if the mouse is dragged into or out of the component. If no listener is found for the event a default behaviour is performed – sometimes to ignore it, sometimes to pass the event to the component containing the target (e.g. if the component has been added to a JPanel).

If you have registered a listener object for the event, then the appropriate method is called. In the case of a mouse click for a MouseListener object, the mouseClicked() method is invoked and your code starts to execute.

Stage 3 – doing your own things

Now your code gets to execute and this will typically mean updating some aspect of your internal state (or Model), setting variables, updating data structures etc. You may just be updating standard Swing components, perhaps setting putting a String into a JTextField – however this effectively updates the state of these components.

Note however, that your code inside the method is being run in the Java UI thread. This means that while it is executing no other user input can be processed (although events such as keypresses, mouse clicks etc. will be queued up to be dealt with later). This is quite a good thing – if this were not the case and a second user action happened before the first was complete you would have the second event being processed while the first was half way through – just imagine what would happen to your data structure!

Happily you are spared this problem, because there is a single UI thread all the events are serialised and the methods in your code to deal with them get executed one at a time in the right order.

However, there is a counter problem: if you do lots of computation in your event handlers, the user interface will freeze until you are done (haven't you see applications just like that!). Normally this is not an issue if you are just updating the odd variable etc. However, if you do really large amounts of computation (e.g. run a simulation), or need to access external resources (read a file, access a database, grab a web resource), then there is a danger that the interface may hang.

You can avoid a hung interface by launching your own thread to perform complex calculations, wait for network things to happen, etc. (see Section 8.3.4) – but if you do this then you need to be careful about synchronising this with the Java UI thread which manages events and screen painting … so let's assume the actions to perform are simple!

Stage 4 – calling repaint

Normally the effect of the event is to change something that requires the screen to be updated. If not why not? If something has been done then the user needs to know about it! (Recall the principle of observabilty and also "offer informative feedback" in Chapter 7) The possible exception would be where the event for some reason had no effect, perhaps clicking over an inactive button … in which case does the button clearly show it is inactive?

Assuming the screen does need to be updated, you may naturally feel you want your code to start writing to the screen: drawing lines, boxes, displaying text. However, in Java and many UI toolkits and environments you do not do this directly at this point. Instead, this is left to the screen painting activity. However, you do need to tell the runtime system that the screen requires updating and to do this you call the 'repaint()' method on components that need to be redrawn.

In the case where you are sub-classing a standard component (most likely JComponent or JPanel), this means you just run 'repaint()' and the repaint method of 'this' is called.

Note that the repaint() method does not actually repaint the screen! In fact all it does is set an internal 'screen dirty' flag that tells the Java runtime that the screen needs to be updated.

If you are using standard Swing components you may never call repaint() directly, but when, for example, you set the text in JTextField, internally the setText() method will call repaint(). Also if you use a Model-View-Controller model, you may again not call repaint() directly in your Listener, but it will update the Model, the Model will tell the View that it has changed and the View will call repaint()!

Note that when you update several components, repaint() will be called several times. The system underneath keeps track of this and builds a list of all the parts of the screen that need to be repainted. Also, if you are calling repaint() and only a small part of your component has changed, you can give it a bounding rectangle to tell it that only a part of it needs to be repainted; that is specify a rectangle that includes all areas of the screen that need to be repainted.

Stage 5 – UI waiting

Often repaint() is the last thing that happens in your listener, but need not be. However, when your listener has finished it returns. At this point the UI thread will catch up on any missed user events (if your listener did do lots of computation and took a long time!) calling the relevant listeners in order, but most often there are none and it simply waits for more user interaction.

Stage 6 – the paint thread enters the action

When there are no further user events queued events waiting, Java looks to see if the 'dirty' flag has been set and if so knows the screen needs updating.

Rather like with event management, it needs to work out which components need to be repainted and then asks each component to draw itself on screen by calling its paint() method. If there are several overlapping components it will draw them backmost first, so that the foremost component gets drawn on top.

Note that repainting may also occur when the events are internally generated, such as receiving a network message, or externally generated due to user actions that are not obviously to do with the application, such as resizing a window, or exposing it by closing another window.

Stage 7 – component paint thyself

Eventually your component gets to actually draw itself on screen. For standard Swing components this all happens in the Swing code, but if you want to do something special you can override the default paint method and write your own.

In the case of a simple component you can override paint() directly, but if you are creating a custom component that may contains other components (e.g. if you want a standard button on your custom component), then instead you may override paintComponent(). The default paint method calls this first to paint the background and then one by one calls the paint() method on its sub-components.

Your paint method is passed a Graphics object. This is effectively a 'handle' or way of accessing the portion of screen to paint to, although often is an off-screen buffer that is copied to the screen proper when you have finished.

The Graphics object can be drawn onto with lines, geometric shapes, text and images (there be dragons!).

The model while you are in paint() is of adding things one on top of another. If you draw some text and then draw a rectangle overlapping the text, the rectangle will cover the text (unless it is drawn in a translucent colour).

However, note that if you draw a rectangle on screen when paint is called one time and do not draw it when it is called again, the original rectangle will disappear – the model is that just before paint the relevant area of screen is wiped clean; you start with a blank canvas every time. This is why it important that you maintain a model of your internal state (whether this is a special class or just some variables), which you can refer to when painting the screen.

In most toolkits including Java AWT/Swing, anything you draw is clipped to the region the paint thread wants redrawn. This means you do not have to worry about drawing things near the edge of the screen that might draw outside its borders, or when your window is partially obscured.

However, when you have a very complex screen, you may want to use this fact and not bother to draw things that will fall outside the area being repainted. To do this you can look at the Graphics object and ask for its clipping region. However, you have to be careful to redraw everything that overlaps the region otherwise parts of things will disappear from screen. For even moderately complex screen layouts it is often easier to simply redraw everything.

… but do remember back-to-front drawing order.

Stage 8 – and so to rest

The paint() method returns. If the Graphics object was actually pointing to a temporary off-screen buffer, this is copied to the screen and the paint thread waits for the screen to be again marked dirty by repaint(), and the UI thread waits … all is peaceful in the world of the Java GUI … until the next user interaction!

S2.3.4 Doing several things at once: asynchrony and threads

The simplest kind of user interface proceeds as a turn-taking dialogue:

1. user does something

2. window system passes event to application

3. application does some processing of the event

4. application updates the screen

5. window system waits for next user action

Notice that the sequence of events in the Java AWT/Swing toolkit in the last section is a little more complicated. If the user actions produce many events (e.g. rapid typing or dragging the mouse), then it is possible for the simple turn-taking approach to get left behind. Occasionally this is evident in some applications that 'freeze' for a while and then produce a whole series of screen updates as they catch up on the users mouse clicks and keystrokes. To deal with this situation, Java only updates the screen when there are no more events waiting; that is it performs steps 2 and 3 repeatedly for each queued event and only does the screen update (step 4) when it has cleared the backlog.

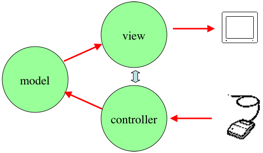

This leads to a style of coding where the code for step 3 updates some part of the application state (e.g. appending a typed character to a document) and the code for step 4 updates the display based on the current state. We will see in the next section how this style of programming fits well the MVC architectural style.

This works very well for applications such as a word processor, where the screen just needs to show the current state of the document, but is slightly more complicated when some animation or transition is required.

One way to deal with this would be to have the state-update code set some sort of animation_required flag and then screen-update code be something like:

if animation_required flag is set

for each frame of the animation

set screen to the frame

wait 40 milliseconds

clear animation_required flag

However, this could mean that if the user did anything else their input would be ignored until the animation was complete – if this was playing a video it could be a very long wait!

Happily many of the occasions you would need to do this, such as video replay, are likely to be handled by the toolkit or operating system by specialised functions. However, sometimes you need to do this yourself.

If so, to allow user input during the animation, user interface code instead sets a timer (say for 40 milliseconds) and registers a callback for the timer (just like the callback for a user event). The toolkit or window manager then generates a timer event every 40 milliseconds and the callback method is called. The application code in the callback then simply updates the current animation frame every time it gets a timer event.

Recall the 'draw once per frame' display update method described in section 8.3.1, which is used in some game engines. This is effectively an extreme variant of this where such timers drive all update, because the whole game is effectively one continuing and evolving animation.

Note that instead of your code being effectively dealing with one user action at a time and finishing with it, it is now dealing with potentially several things at the same time as at one moment it is updating an animation, the next dealing with a user keystroke, then returning to a bit more of the animation. That is there are several interleaved streams of activity going on at the same time. If, as in a game, the animation depends on things the user is doing (e.g. driving speed), then these interleaved streams of activity may interact.

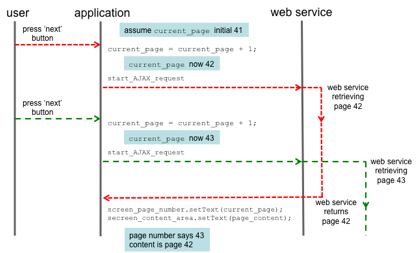

As well as animations in the screen-update code, this form of interleaved coding can also happen in the state-update part of the user interface code, especially when some sort of network activity is required. In particular, this occurs in web interfaces that use AJAX (Asynchronous JavaScript and XML) [Ga05]. In an AJAX based system, the JavaScript code initiates a request for a web service, but typically does not wait for the reply. Instead it registers a callback, which gets called when the web service has returned a result (or maybe failed in some way).

For example, suppose you have web interface that displays the pages of an ebook. You might think of the code as follows:

current_page = 1;

next_page_button.click( process_next ); // set callback

function process_next() {

current_page = current_ page + 1;

page_content = << get text for current_page >>

page_number_on_screen.setText( current_page );

content_area_on_screen.setText( page_content );

}

If the full text of the book has been put in an array when the page was produced, the part of the code written "<< get text for page_num >>" may just be to access the array:

page_content = book_pages[ num ];

If so the style of the code fragment would work fine. However, this would only be likely to work with small books, and at some stage you may want to have the pages retrieved from the server using AJAX. Now the code that seemed to all be one gets split in two:

current_page = 1;

next_page_button.click( async_process_next ); // set callback

function async_process_next() // assume attached to the 'load' button

{

current_page = current_page + 1;

request_uri = "http://ex.com/api/page/" + current_page;

// register callback and initiate request

start_AJAX_request( request_uri, finish_process_next );

}

function finish_process_next( response ) {

page_content = response.getTextContent();

page_number_on_screen.setText( current_page );

content_area_on_screen.setText( page_content );

}

Again you need to be very careful in such applications. First you need to be careful because, as above, you have to split code that in your mind belongs together into several pieces broken by the AJAX calls. Second you have to be careful as the user may perform fresh actions, invoking more of your event callbacks, before the web service response is received.

Figure 8.15 shows a possible timeline of the code above. The user presses the 'next' button twice in close succession. Initially the current page is 41. The application updates this to 42 and then initiates an AJAX request for page 42. However, before this call returns the user's second 'next' button press is processed, so the page number is updated to 43 and a second AJAX request initiated. So, when the response to the first request is received, the page number says 43 even though the page that gets displayed is page 42.

After a while the second request would return, page 43 contents would be displayed and things would be consistent again, but in the mean time the display would be very confusing.

Figure 8.15 Possible timeline of asynchronous code

Even worse, if the AJAX request for page 43 returned more quickly than the first request, the system might first display page 43 and then a few moments later display page 42 when the first request completes. This time the screen would never become consistent and continue to show page 42 with the page number saying 43. To confuse the user more, if the user then presses next again, the page number would move on toe page 44 and page 44 would be displayed, missing page 43 entirely.

This sort of situation can be avoided with careful coding. In particular, you can usually associate additional information with an AJAX call, so that when you receive the response you know precisely which request it corresponds to. However, care is still required to avoid creating inconsistent or buggy results.

When you test your code the web service is often local or on a fast connection, so that you never experience this interleaving of user actions with the send and receive of the web service call. It is only, when your code is deployed and running on a slower connection, or where the web server is heavily loaded, that this interleaving occurs and your code breaks! Similar issues occur for multi-user code and will be discussed in Chapter 20.

Even if all animations and network access is managed through asynchronous callbacks, still the application may need to perform some very complex and time-consuming calculation or process a very large data file. So, the user interface could still freeze while this happens. When there are potential delays like this, you should display a progress indicator, but even doing this is a problem. Going back to the five steps at the beginning of this section; step 4 (screen update) would not happen until step 3 (state update) is completed, so the progress indicator would not appear until the work was complete!

One way to deal with this is to split you complex calculation into lots of small chunks (e.g. reading a few hundred lines of the big file per chunk). At the end of each chunk you set a timer for a very short time (maybe one millisecond). If there are no other events queued you just run straight away, but if there are they get a chance to be processed. If the code is simple (albeit long!) loop, then splitting the code is usually straightforward. However, if the code has loops within loops, or many embedded levels of function calls, things can be more complicated and you end up 'inverting' the code turning the implicit state of loop variables and the call stack into explicit state that you manage yourself. If the code you are using is in third-party libraries, then you cannot break these down at all.

Some platforms have a special system call such as yield() which means "if you want to process some other events do it now", which solves the inversion problems, but not that of third-party libraries.

An alternative, which is available in Java and many other platforms (but not most JavaScript) is threads. This means that several pieces of code can run effectively at the same time, or even the same piece of code may be executing twice concurrently! When a user event requires a lot of work (perhaps reading the large file), a separate thread is created and the complex calculation is performed in this thread. The event processing method can then return and the normal user interface thread keep on processing new user events while the calculation thread proceeds at the same time.

Threads take away the problem of needing to invert code, but are themselves complicated when you want to pass information back and forth between the thread and the main UI code. If both threads try to update the same data things can get very nasty! Even if the UI thread just reads a data structure produced by the calculation thread (e.g. the progress per cent) it may be possible to read a partially written and inconsistent state. These problems can be dealt with if you are careful, but do require a different manner of thinking.

When producing applications, it is also easy to assume that this sort of delay can only occur when there is some obvious slow processing such as using a web service or very complex calculations. However, files may sit on network drives, so even reading a small file may occasionally take a long time (or a long time to fail) if there is some sort of network glitch. This is one of the reasons many programs occasionally hang for a short period and then continue as normal. So as you design interactive applications, whether in web or desktop, you need to be aware that these delays can happen and perhaps expect asynchrony to be the norm rather than the exception.

S2.4 Toolkits and widgets

As we discussed in Chapter 4, a key feature of WIMP interfaces from the user's perspective is that input and output behaviors are intrinsically linked to independent entities on the display screen. This creates the illusion that the entities on the screen are the objects of interest – interaction objects we have called them – and that is necessary for the action world of a direct manipulation interface. A classic example is the mouse as a pointing device. The input coming from the hardware device is separate from the output of the mouse cursor on the display screen. However, since the visual movement of the screen cursor is linked with the physical movement of the mouse device, the user feels as if he is actually moving the visual cursor. Even though input and output are actually separate, the illusion causes the user to treat them as one; indeed, both the visual cursor and the physical device are referred to simply as 'the mouse'. In situations where this link is broken, it is easy to see the user's frustration.

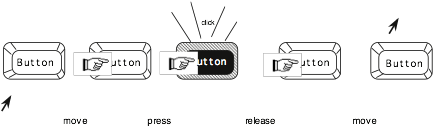

In Figure 8.16, we show an example of how input and output are combined for interaction with a button object. As the user moves the mouse cursor over the button, it changes to a finger to suggest that the user can push it. Pressing the mouse button down causes the button to be highlighted and might even make an audible click like the keys on some keyboards, providing immediate feedback that the button has been pushed. Releasing the mouse button unhighlights the button and moving the mouse off the button changes the cursor to its initial shape, indicating that the user is no longer over the active area of the button.

Figure 8.16 Example of behavior of a button interaction object

From the programmer's perspective, even at the level of a windowing system, input and output are still quite separate for everything except the mouse, and it takes quite a bit of effort in the application program to create the illusion of the interaction object such as the button we have just described. To aid the programmer in fusing input and output behaviors, another level of abstraction is placed on top of the window system – the toolkit. A toolkit provides the programmer with a set of ready-made interaction objects – alternatively called interaction techniques, gadgets or widgets – which she can use to create her application programs. The interaction objects have a predefined behavior, such as that described for the button, that comes for free without any further programming effort. In particular, many of the issues to do with state and display update described in the previous section get managed by the individual interaction objects, so that you, for example, just set the contents of a text widget and it worries about actually drawing the characters on the screen.

Toolkits exist for all windowing environments (for example, OSF/Motif and XView for the X Window system, the Macintosh Toolbox and the Software Development Toolkit for Microsoft Windows). In addition many programming languages provide another level of platform-independent toolkit (e.g. Java AWT/Swing) which allows programmers to create code to run on many different systems.

To provide flexibility, the interaction objects can be tailored to the specific situation in which they are invoked by the programmer. For example, the label on the button could be a parameter, which the programmer can set when a particular button is created. More complex interaction objects can be built up from smaller, simpler ones. Ultimately, the entire application can be viewed as a collection of interaction objects whose combined behavior describes the semantics of the whole application.

1. /*

2. * quit.c -- simple program to display a panel button that says "Quit".

3. * Selecting the panel button exits the program.

4. */

5. # include <xview/xview.h>

6. # include <xview/frame.h>

7. # include <xview/panel.h>

8. Frame frame;

9. main (argc, argv)

10. int argc;

11. char *argv[];

12. {

13. Panel panel;

14. void quit();

15.

16. xv_init(XV_INIT_ARGC_PTR_ARGV, &argc, argv, NULL);

17. frame = (Frame) xv_create(NULL, FRAME,

18. FRAME_LABEL, argv[0],

19. XV_WIDTH, 200,

20. XV_HEIGHT, 100,

21. NULL);

22. panel = (Panel) xv_create(frame, PANEL, NULL);

23. (void) xv_create(panel, PANEL_BUTTON,

24. PANEL_LABEL_STRING, "Quit",

25. PANEL_NOTIFY_PROC, quit,

26. NULL);

27. xv_main_loop(frame);

28. exit(0);

29. }

30. void quit()

31. {

32. xv_destroy_safe(frame);

33. }Figure 8.17 A simple program to demonstrate notification-based programming. Example taken from Dan Heller [169], reproduced by permission of O'Reilly and Associates, Inc

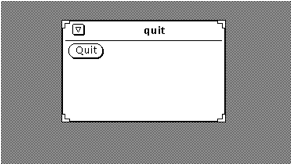

Figure 8.18 Screen image produced by sample program quit.c

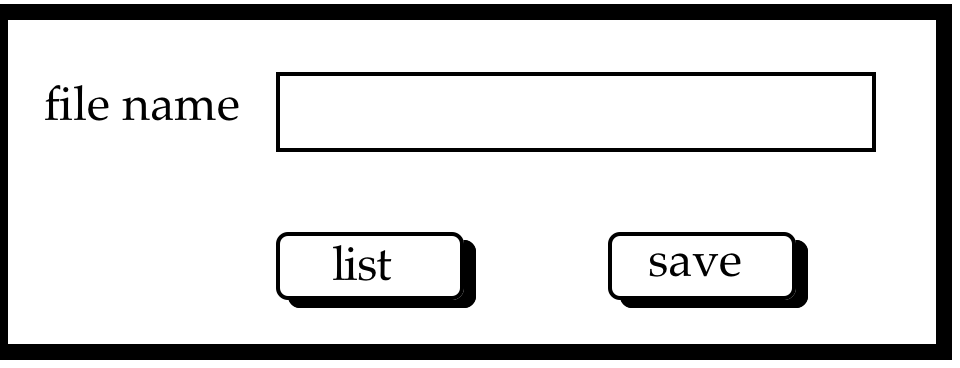

The sample program quit.c in Figure 8.17 uses the XView toolkit, which adopts the notification-based programming paradigm as described in Section 8.3.2. The program produces a window, or frame, with one button, labelled 'Quit', which when selected by the pointer device causes the program to quit, destroying the window (see Figure 8.18 for the screen image it produces). Three objects are created in this program: the outermost frame, a panel within that frame and the button in the panel (a PANEL_BUTTON interaction object). The procedure xv_create, used on lines 17, 22 and 23 in the source code of Figure 8.17, is used by the application program to register the objects with the XView notifier. In the last instance on line 23, the application programmer informs the notifier of the callback procedure to be invoked (the PANEL_NOTIFY_PROC) when the object, a button, is selected. The application program then initiates the notifier by the procedure call xv_main_loop. When the notifier receives a select event for the button, control is passed to the procedure quit, which destroys the outermost frame and requests termination.

The code describes what elements are required on screen and what to do when the 'Quit' button is pressed. However, it does not need to worry about the detailed interaction with the button in Figure 8.16. The button interaction object in the toolkit already has defined what actual user action is classified as the selection event, so the programmer need not worry about that when creating an instance of the button. The programmer can think of the event at a higher level of abstraction, that is as a selection event instead of as a release of the left mouse button.

In Chapter 7 we discussed the benefits of consistency and generalizability for an interactive system. One of the advantages of programming with toolkits is that they can enforce consistency in both input form and output form by providing similar behavior to a collection of widgets. For example, every button interaction object, within the same application program or between different ones, by default could have a behavior like the one described in Figure 8.8. All that is required is that the developers for the different applications use the same toolkit. This consistency of behavior for interaction objects is referred to as the look and feel of the toolkit. Style guides, which were described in the discussion on guidelines in Chapter 7, give additional hints to a programmer on how to preserve the look and feel of a given toolkit beyond that which is enforced by the default definition of the interaction objects.

Two features of interaction objects and toolkits make them amenable to an object-oriented approach to programming. First, they depend on being able to define a class of interaction objects, which can then be invoked (or instantiated) many times within one application with only minor modifications to each instance. Secondly, building complex interaction objects is made easier by building up their definition based on existing simpler interaction objects. These notions of instantiation and inheritance are cornerstones of object-oriented programming. Classes are defined as templates for interaction objects. When an interaction object is created, it is declared as an instance of some predefined class. So, in the example quit.c program, frame is declared as an instance of the class FRAME (line 17), panel is declared as an instance of the class PANEL (line 22) and the button (no name) is declared as an instance of the class PANEL_BUTTON (line 23). Typically, a class template will provide default values for various attributes. Some of those attributes can be altered in any one instance; they are sometimes distinguished as instance attributes.

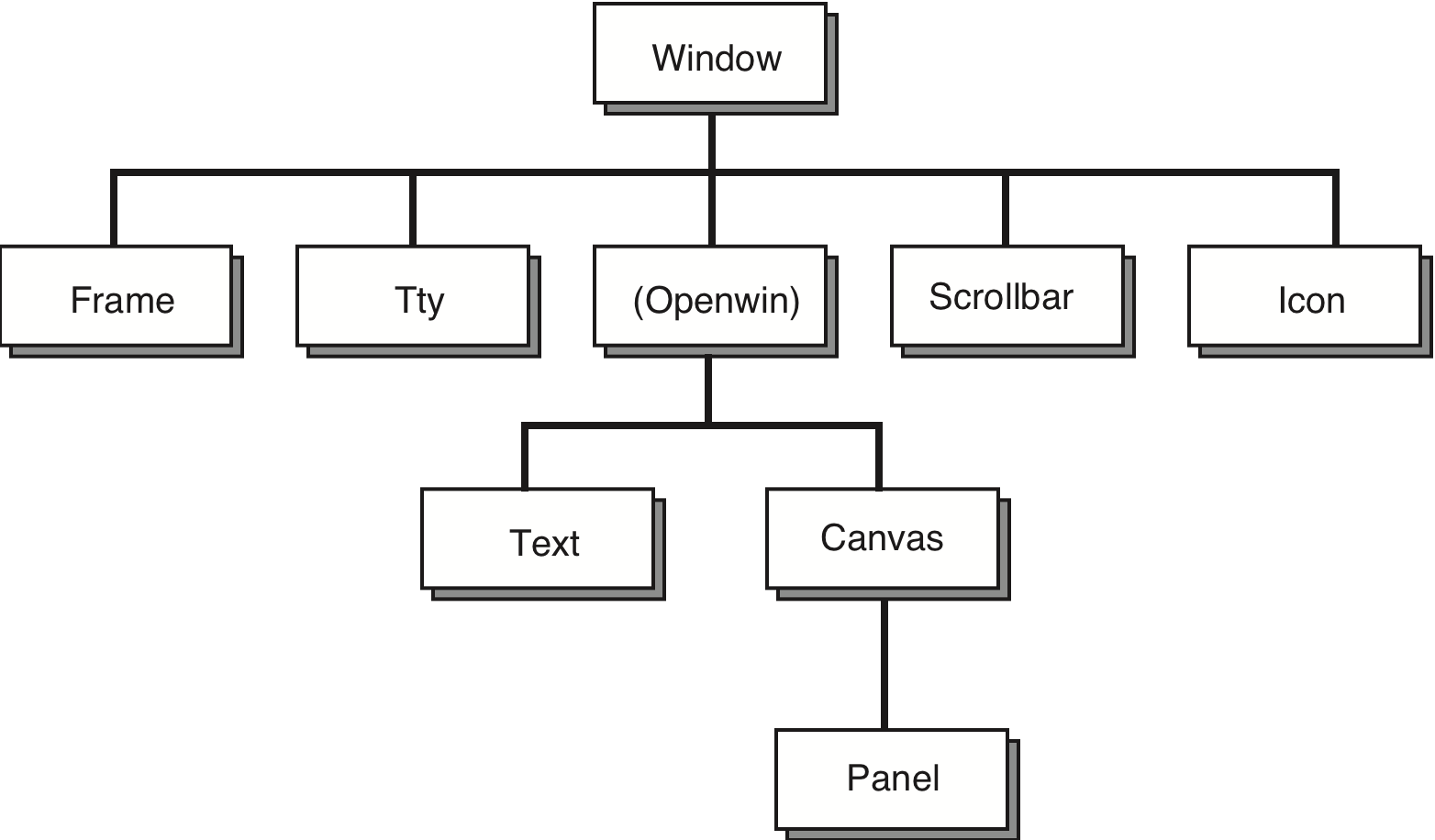

In defining the classes of interaction objects themselves, new classes can be built which inherit features of one or other classes. In the simplest case, there is a strict class hierarchy in which each class inherits features of only one other class, its parent class. This simple form of inheritance is called single inheritance and is exhibited in the XView toolkit standard hierarchy for the window class in Figure 8.19. A more complicated class hierarchy would permit defining new classes that inherit from more than one parent class – called multiple inheritance.

Figure 8.19 The single inheritance class hierarchy of the XView toolkit, after Heller [169], reproduced by permission of O'Reilly and Associates, Inc

We should point out that, though most toolkits are structured in an object-oriented manner, this does not mean that the actual application programming language is object oriented. The example program quit.c is written in the C programming language, which is not an object-oriented language. It is best to think of object orientation as yet another programming paradigm, which structures the way the programmer attacks the programming task without mandating a particular syntax or semantics for the programming language.

The programmer can tailor the behavior and appearance of an interaction object by setting the values of various instance attributes. These attributes must be set before the application program is compiled. In addition, some windowing systems allow various attributes of interaction objects to be altered without necessitating recompilation, though they may have to be set before the actual program is run. This tailorability is achieved via resources that can be accessed by the application program and change the compiled value of some attributes. For efficiency reasons, this tailorability is often limited to a small set of attributes for any given class.

Worked exercise

Scrolling is an effective means of browsing through a document in a window that is too small to show the whole document. Compare the different interactive behavior of the following two interaction objects to implement scrolling:

1. A scrollbar is attached to the side of the window with arrows at the top and bottom. When the mouse is positioned over the arrow at the top of the screen (which points up), the window frame is moved upwards to reveal a part of the document above/before what is currently viewed. When the bottom arrow is selected, the frame moves down to reveal the document below/after the current view.

2. The document is contained in a textual interaction object. Pressing the mouse button in the text object allows you to drag the document within the window boundaries. You drag up to browse down in the document and you drag down to browse up.

The difference between the two situations can be characterized by noticing that, in the first case, the user is actually manipulating the window (moving it up or down to reveal the contents of the document), whereas, in the second case, the user is manipulating the document (pushing it up or down to reveal its contents through the windows). What usability principles would you use to justify one method over the other (also consider the case when you want to scroll from side to side as well as up and down)? What implementation considerations are important?

Answer

There are many usability principles that can be brought to bear on an examination of scrolling principles. For example:

Observability The whole reason why scrolling is used is because there is too much information to present all at once. Providing a means of viewing document contents without changing the contents increases the observability of the system. Scrollbars also increase observability because they help to indicate the wider context of the information which is currently visible, typically by showing where the window of information fits within the whole document. However, observability does not address the particular design options put forth here.

Predictability The value of a scrolling mechanism lies in the user being able to know where a particular scrolling action will lead in the document. The use of arrows on the scrollbar is to help the user predict the effect of the scrolling operation. If an arrow points up, the question is whether that indicates the direction the window is being moved (the first case) or the direction the actual text would have to move (the second case). The empirical question here is: to what object do users associate the arrow – the text or the text window? The arrow of the scrollbar is more closely connected to the boundary of a text window, so the more usual interpretation would be to have it indicate the direction of the window movement.

Synthesizability You might think that it does not matter which object the user associates to the arrow. He will just have to learn the mapping and live with it. In this case, how easy is it to learn the mapping, that is can the user synthesize the meaning of the scrolling actions from changes made at the display? Usually, the movement of a box within the scrollbar itself will indicate the result of a scrolling operation.

Familiarity/guessability It would be an interesting experiment to see whether there was a difference in the performance of new users for the different scrolling mechanisms. This might be the subject of a more extended exercise.

Task conformance There are some implementation limitations for these scrolling mechanisms (see below). In light of these limitations, does the particular scrolling task prefer one over the other? In considering this principle, we need to know what kinds of scrolling activity will be necessary. Is the document a long text that will be browsed from end to end, or is it possibly a map or a picture which is only slightly larger than the actual screen so scrolling will only be done in small increments?

Some implementation considerations:

What scroll mechanisms does a toolkit provide? Is it easy to access the two options discussed above within the same toolkit?

In the case of the second scrolling option, are there enough keys on the mouse to allow this operation without interfering with other important mouse operations, such as arbitrarily moving the insertion point or selecting a portion of text or selecting a graphical item?

In the second option, the user places the mouse on a specific location within the window, and gestures to dictate the movement of the underlying document. What kind of behavior is expected when the mouse hits the boundary of the window? Is the scrolling limited in this case to steps bounded in size by the size of the window, so that scrolling between two distant points requires many separate smaller scrolling actions?

S2.5 Architecture and Frameworks

Despite the availability of toolkits and the valuable abstraction they provide programmers, there are still significant hurdles to overcome in the specification, design and implementation of interactive systems. Toolkits provide only a limited range of interaction objects, limiting the kinds of interactive behavior allowed between user and system. Toolkits are expensive to create and are still very difficult to use by non-programmers. Even experienced programmers will have difficulty using them to produce an interface that is predictably usable. There is a need for additional support for programmers in the design and use of toolkits to overcome their deficiencies. Also, none of the programming mechanisms we have discussed so far in this chapter is appropriate for non-expert programmers, so we still have a long way to go towards the goal of opening up interactive system implementation to those whose main concerns are with HCI and not programming.

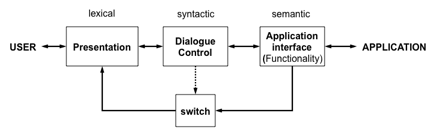

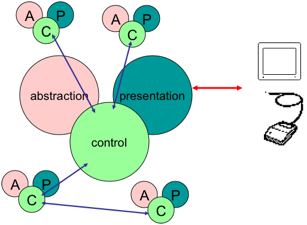

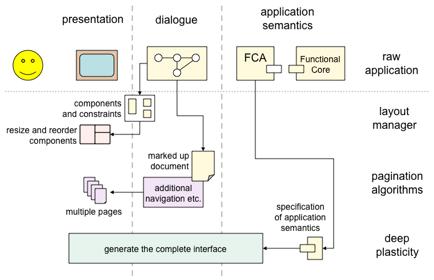

S2.5.1 Separation and presentation abstraction: Seeheim

Early in the days of user interface programming, it became evident that another level of services were required for interactive system design beyond the toolkit level. This lead to the development of what were then known as user interface management systems, or UIMS for short. Now-a-days other tersm suchThe term UIMS is now less widely used and many of the functions of UIMS are available as part of general IDEs (integrated development environments), such as NetBeans or Eclipse, either built-in or as a plug-in. However, many of the original concerns of UIMS are still important today:

- a conceptual architecture for the structure of an interactive system which concentrates on a separation between application semantics and presentation;

- techniques for implementing a separated application and presentation whilst preserving the intended connection between them;

- support techniques for managing, implementing and evaluating a run-time interactive environment.

A major issue in this area of research is one of separation between the semantics of the application and the interface provided for the user to make use of that semantics. There are many good arguments to support this separation of concerns:

Portability To allow the same application to be used on different systems it is best to consider its development separate from its device-dependent interface.

Reusability Separation increases the likelihood that components can be reused in order to cut development costs.

Multiple interfaces To enhance the interactive flexibility of an application, several different interfaces can be developed to access the same functionality.

Customization The user interface can be customized by both the designer and the user to increase its effectiveness without having to alter the underlying application.